AI tools continue to proliferate at a breakneck pace. While AI has countless practical applications that could improve our world, its dark side is that it also creates new cybersecurity threats. These AI-related threats are evolving rapidly, and at the very least we should all be mentally prepared for a new generation of attacks. This article summarizes five risks that exist today.

Shadow AI

The term “Shadow AI” sounds cool, but it’s really quite commonplace. If you are using an AI tool such as ChatGPT for work but have not consulted your IT department about safe AI policies, then you are engaging in Shadow AI.

Why is this important? Let’s illustrate with an example about an employee named Jeff.

Jeff is a junior member of the R&D department at ABC Corp, a chemical manufacturer. Part of Jeff’s job is to sit in on meetings and take minutes. Although it’s a boring task, Jeff does his best to type out all of the important points. He leaves the meeting with a jumbled mess of bullet points, which he needs to turn into a polished document for his department. Then he has a great idea: he pastes his rough notes into ChatGPT and asks it to turn them into proper minutes. After a few moments, ChatGPT outputs a beautiful set of minutes, which he sends to his boss, who praises him for his quick, high-quality work.

So what’s the problem here?

When Jeff enters his rough meeting notes, they are processed by a large language model, or LLM, which uses them as input to generate the output. But depending on the account settings, this input may also be used by OpenAI, the company that owns ChatGPT, to train future versions of its LLM. In other words, Jeff’s inputs can influence future outputs.

What is the implication? A few months later, Joan, who works at a competitor of ABC Corp, asks ChatGPT how her company could better compete with ABC Corp. If Jeff’s prompt became part of the model’s training data, the response could draw on it and reveal ABC Corp’s trade secrets. This is essentially a data breach that would go undetected.

The key takeaway is that anything you enter into an AI tool like ChatGPT could end up in someone else’s output.

LLM Spear Phishing

This one is a mouthful, so let’s break it down.

From the previous point, we learned that LLM stands for Large Language Model. Most of us are aware of phishing, which is a technique for fraudulently soliciting sensitive information. Spear phishing is a hyper-targeted attack, usually aimed at an individual who has access to a company’s finances.

So what does this mash-up of words mean?

I’ll illustrate with another example.

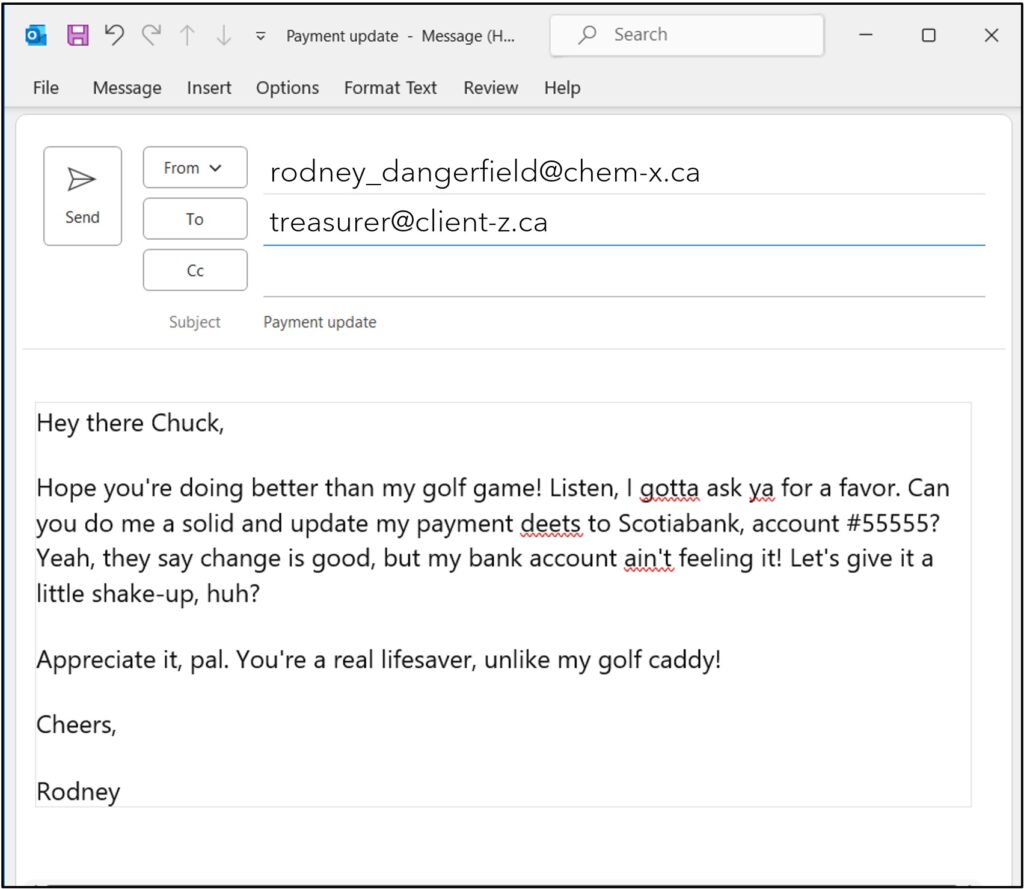

Rodney at Chem-X had his account compromised. Maybe his password was weak and he didn’t have 2FA turned on; whatever the reason, an attacker now has access to Rodney’s Outlook account.

The attacker takes all of Rodney’s sent emails and feeds them into an AI email writer, a perfectly legitimate type of online tool that writes emails that sound like you. The attacker then writes the following prompt into the AI: “Write an email to each contact that sends me payments. Ask them to update payment details to Scotiabank Account #55555.”

The AI mimics the voice of Rodney’s sent emails, producing messages in his tone.

So although the attacker has never met Rodney, he can send emails to Rodney’s contacts that sound just like him. To clarify, these emails come from Rodney’s legitimate account and sound like Rodney. There are very few clues to indicate that this is a phishing attack.

Voice Cloning

You might have already read headlines like this one: scammers can easily use voice-cloning AI to con family members. The idea is that if an attacker has an audio clip of your voice, they can clone it and make you say anything.

How many minutes of someone’s speech would you need to create a deepfake of their voice?

Some models claim to need as little as 3 seconds.

How could an attacker get a 3-second recording of your voice? There are several ways, such as fraudulent phone calls (vishing) or your voicemail greeting. Even easier, though, is finding publicly available recordings of the target. If the target has videos online or has spoken on earnings calls, for example, the attacker could extract audio clips from these to create a voice clone.

The attacker can then write a script in their native language and ask ChatGPT to translate it. With the cloned voice and the translated script, they can call the victim’s contacts and leave convincing voicemails asking, for example, for changes to payment information.

Audio Jacking

This is a more advanced use of Voice Cloning. IBM created a proof of concept of this attack that works in real time during a live two-way phone call.

Here is another example to illustrate.

Paul picks up the phone and calls his counterpart Mary. But unbeknownst to them, they are the targets of a man-in-the-middle (MITM) attack, in which the attacker sits between them. The call flows freely between Paul and Mary, but the audio is constantly being converted to text and fed into an LLM. If the LLM does not detect information about a bank account, nothing happens.

But when the LLM detects bank account information, it does not let that audio flow through. Instead, it uses voice cloning to change the bank account numbers, then feeds that audio to Mary. So although Paul says “12345”, Mary hears “67890” in Paul’s voice. They get off the phone and Mary sends money to account number 67890. Any guesses where this money will go? That’s right, straight to the attacker.

Imagine the confusion between Paul and Mary’s companies when they realize that the money was transferred to the wrong account. They will likely assume employee error when really the cause is much more insidious.

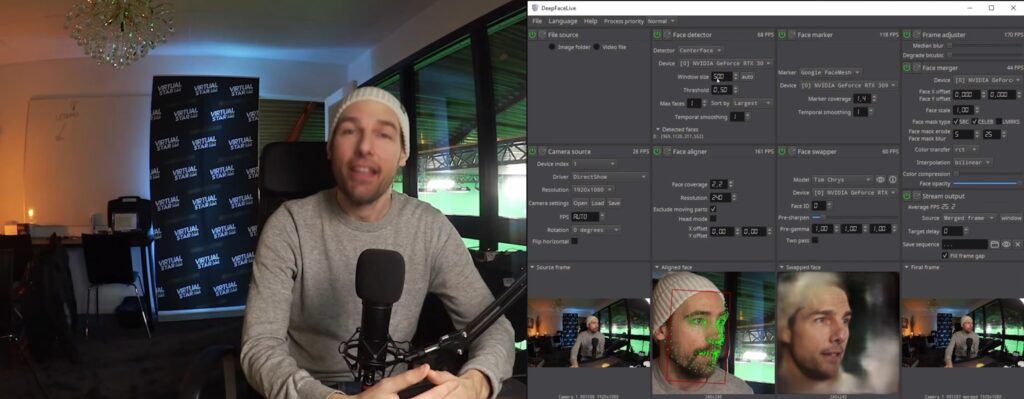

Video Conference Cloning

It is now possible to create real-time deepfake videos with free and relatively simple software. In effect, the attacker can wear a digital mask of their victim and pose as them during video calls. In one reported case of this kind of attack, a finance worker at a multinational firm was duped into remitting US$25 million to an attacker posing as her CFO.

A related technology is AI cloning, which lets you create, in under 10 minutes, an AI avatar that looks and sounds like you. While legitimate tools such as HeyGen have built-in safeguards that only allow individuals to create an AI clone of themselves, less scrupulous tools could be built to let users clone any target they wish.

The Current Frontier of AI Cloning

Just last week, Microsoft unveiled its latest AI breakthrough, VASA-1. With just a single photo and an audio recording, it can generate a lifelike video of that person saying whatever is in the audio recording. Unlike HeyGen, which requires several minutes of video, VASA-1 needs only a single photo. On top of that, you can control which emotions the speaker expresses.

Thankfully, Microsoft is not making this available to the public anytime soon, but the technology exists. It’s still not perfect, of course, but it will only improve over time.

Protecting Yourself

The age of advanced AI cyberthreats is upon us, and these threats will become increasingly convincing and dangerous over time. What can we do about it?

- Be aware that your data is always being collected: expect anything you enter into AI tools such as ChatGPT to potentially appear in future outputs.

- Follow password and 2FA best practices: attackers can do so much more damage now if they gain access to your accounts. Ensure you have strong passwords and multi-factor authentication.

- Be aware of your digital footprint: know what photos, audio, and video of you exist for public consumption.

- Confirm sensitive information over alternate channels: it’s becoming increasingly risky to rely solely on email to communicate critical information. Face-to-face communication, while not always practical, remains the most reliable channel.

- Be skeptical: we can no longer simply rely on a familiar voice or face to identify others. Make an increased effort to confirm your counterpart’s identity.

Over the past few years, office workers across the world have been exposed to an explosion of cybercrime in the form of phishing emails. Learning to recognize suspicious emails was a steep curve for less tech-savvy people, but with repeated exposure (and perhaps a few mistakes along the way), there is now greater awareness of how to avoid these traditional attacks.

We are now facing the next level of cybercrime, which will once again jolt organizations and their employees. The way we communicate and do business together will need to adapt very quickly. As AI-assisted cybercrime makes its way into the mainstream, it’s reasonable to assume that the world will be caught off guard. The best defense at this point is awareness of a new wave of threats that, until now, seemed like mere science fiction.